Note

Go to the end to download the full example code.

Convert a pipeline with a LightGBM regressor¶

The discrepancies observed when using float and TreeEnsemble operator (see Issues when switching to float) explains why the converter for LGBMRegressor may introduce significant discrepancies even when it is used with float tensors.

Library lightgbm is implemented with double. A random forest regressor with multiple trees computes its prediction by adding the prediction of every tree. After being converting into ONNX, this summation becomes \(\left[\sum\right]_{i=1}^F float(T_i(x))\), where F is the number of trees in the forest, \(T_i(x)\) the output of tree i and \(\left[\sum\right]\) a float addition. The discrepancy can be expressed as \(D(x) = |\left[\sum\right]_{i=1}^F float(T_i(x)) - \sum_{i=1}^F T_i(x)|\). This grows with the number of trees in the forest.

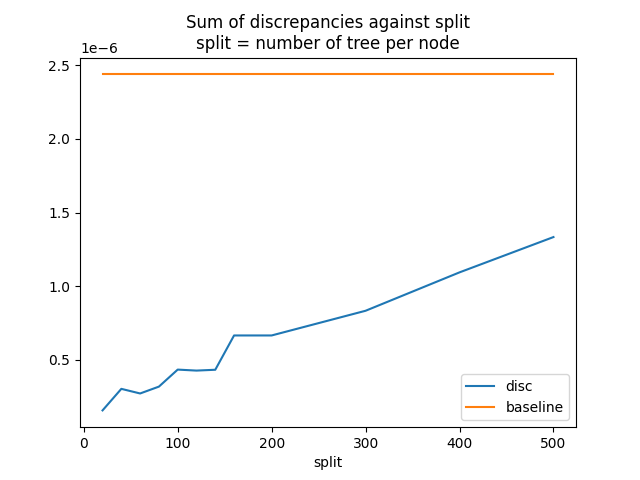

To reduce the impact, an option was added to split the node TreeEnsembleRegressor into multiple ones and to do a summation with double this time. If we assume the node if split into a nodes, the discrepancies then become \(D'(x) = |\sum_{k=1}^a \left[\sum\right]_{i=1}^{F/a} float(T_{ak + i}(x)) - \sum_{i=1}^F T_i(x)|\).

Train a LGBMRegressor¶

import packaging.version as pv

import warnings

import timeit

import numpy

from pandas import DataFrame

import matplotlib.pyplot as plt

from tqdm import tqdm

from lightgbm import LGBMRegressor

from onnxruntime import InferenceSession

from skl2onnx import to_onnx, update_registered_converter

from skl2onnx.common.shape_calculator import (

calculate_linear_regressor_output_shapes,

)

from onnxmltools import __version__ as oml_version

from onnxmltools.convert.lightgbm.operator_converters.LightGbm import (

convert_lightgbm,

)

N = 1000

X = numpy.random.randn(N, 20)

y = numpy.random.randn(N) + numpy.random.randn(N) * 100 * numpy.random.randint(

0, 1, 1000

)

reg = LGBMRegressor(n_estimators=1000)

reg.fit(X, y)

[LightGBM] [Info] Auto-choosing row-wise multi-threading, the overhead of testing was 0.000314 seconds.

You can set `force_row_wise=true` to remove the overhead.

And if memory is not enough, you can set `force_col_wise=true`.

[LightGBM] [Info] Total Bins 5100

[LightGBM] [Info] Number of data points in the train set: 1000, number of used features: 20

[LightGBM] [Info] Start training from score 0.008891

Register the converter for LGBMClassifier¶

The converter is implemented in onnxmltools: onnxmltools…LightGbm.py. and the shape calculator: onnxmltools…Regressor.py.

def skl2onnx_convert_lightgbm(scope, operator, container):

options = scope.get_options(operator.raw_operator)

if "split" in options:

if pv.Version(oml_version) < pv.Version("1.9.2"):

warnings.warn(

"Option split was released in version 1.9.2 but %s is "

"installed. It will be ignored." % oml_version,

stacklevel=0,

)

operator.split = options["split"]

else:

operator.split = None

convert_lightgbm(scope, operator, container)

update_registered_converter(

LGBMRegressor,

"LightGbmLGBMRegressor",

calculate_linear_regressor_output_shapes,

skl2onnx_convert_lightgbm,

options={"split": None},

)

Convert¶

We convert the same model following the two scenarios, one single TreeEnsembleRegressor node, or more. split parameter is the number of trees per node TreeEnsembleRegressor.

model_onnx = to_onnx(

reg, X[:1].astype(numpy.float32), target_opset={"": 14, "ai.onnx.ml": 2}

)

model_onnx_split = to_onnx(

reg,

X[:1].astype(numpy.float32),

target_opset={"": 14, "ai.onnx.ml": 2},

options={"split": 100},

)

Discrepancies¶

sess = InferenceSession(

model_onnx.SerializeToString(), providers=["CPUExecutionProvider"]

)

sess_split = InferenceSession(

model_onnx_split.SerializeToString(), providers=["CPUExecutionProvider"]

)

X32 = X.astype(numpy.float32)

expected = reg.predict(X32)

got = sess.run(None, {"X": X32})[0].ravel()

got_split = sess_split.run(None, {"X": X32})[0].ravel()

disp = numpy.abs(got - expected).sum()

disp_split = numpy.abs(got_split - expected).sum()

print("sum of discrepancies 1 node", disp)

print("sum of discrepancies split node", disp_split, "ratio:", disp / disp_split)

/home/xadupre/vv/this312/lib/python3.12/site-packages/sklearn/utils/validation.py:2691: UserWarning: X does not have valid feature names, but LGBMRegressor was fitted with feature names

warnings.warn(

sum of discrepancies 1 node 0.00012196094005948507

sum of discrepancies split node 4.600063792427767e-05 ratio: 2.651288016054185

The sum of the discrepancies were reduced 4, 5 times. The maximum is much better too.

disc = numpy.abs(got - expected).max()

disc_split = numpy.abs(got_split - expected).max()

print("max discrepancies 1 node", disc)

print("max discrepancies split node", disc_split, "ratio:", disc / disc_split)

max discrepancies 1 node 1.2361176167097199e-06

max discrepancies split node 3.5262577346983903e-07 ratio: 3.505465878305824

Processing time¶

The processing time is slower but not much.

print(

"processing time no split",

timeit.timeit(lambda: sess.run(None, {"X": X32})[0], number=150),

)

print(

"processing time split",

timeit.timeit(lambda: sess_split.run(None, {"X": X32})[0], number=150),

)

processing time no split 1.5174094659960247

processing time split 1.7237651600007666

Split influence¶

Let’s see how the sum of the discrepancies moves against the parameter split.

res = []

for i in tqdm([*range(20, 170, 20), 200, 300, 400, 500]):

model_onnx_split = to_onnx(

reg,

X[:1].astype(numpy.float32),

target_opset={"": 14, "ai.onnx.ml": 2},

options={"split": i},

)

sess_split = InferenceSession(

model_onnx_split.SerializeToString(), providers=["CPUExecutionProvider"]

)

got_split = sess_split.run(None, {"X": X32})[0].ravel()

disc_split = numpy.abs(got_split - expected).max()

res.append(dict(split=i, disc=disc_split))

df = DataFrame(res).set_index("split")

df["baseline"] = disc

print(df)

0%| | 0/12 [00:00<?, ?it/s]

8%|▊ | 1/12 [00:01<00:16, 1.53s/it]

17%|█▋ | 2/12 [00:02<00:13, 1.38s/it]

25%|██▌ | 3/12 [00:03<00:11, 1.29s/it]

33%|███▎ | 4/12 [00:05<00:10, 1.32s/it]

42%|████▏ | 5/12 [00:06<00:08, 1.28s/it]

50%|█████ | 6/12 [00:07<00:07, 1.23s/it]

58%|█████▊ | 7/12 [00:08<00:06, 1.25s/it]

67%|██████▋ | 8/12 [00:10<00:05, 1.30s/it]

75%|███████▌ | 9/12 [00:11<00:03, 1.22s/it]

83%|████████▎ | 10/12 [00:12<00:02, 1.16s/it]

92%|█████████▏| 11/12 [00:13<00:01, 1.26s/it]

100%|██████████| 12/12 [00:15<00:00, 1.21s/it]

100%|██████████| 12/12 [00:15<00:00, 1.26s/it]

disc baseline

split

20 2.431784e-07 0.000001

40 2.413315e-07 0.000001

60 2.932076e-07 0.000001

80 3.181528e-07 0.000001

100 3.526258e-07 0.000001

120 3.461343e-07 0.000001

140 3.878296e-07 0.000001

160 4.182918e-07 0.000001

200 6.583164e-07 0.000001

300 7.289334e-07 0.000001

400 5.353826e-07 0.000001

500 6.243104e-07 0.000001

Graph.

Total running time of the script: (0 minutes 21.746 seconds)